Interconnecting Doris with the Hive Data Source

By connecting to Hive Metastore, or a metadata service compatible with Hive Metastore, Doris can automatically obtain Hive database table information and perform data queries. This avoids complex manual mapping and data migration when there is a large number of conventional external data directories.

Prerequisites

- You have created an MRS cluster. For details, see "Getting Started" > "Purchasing and Using an MRS Cluster" in the MapReduce Service User Guide.

- Create a Doris cluster.

- To ensure network connectivity, the MRS cluster must have the same VPC and subnet as the Doris cluster, and all node IP addresses of the Doris cluster must be added to the security group of the MRS cluster.

Step 1: Creating an MRS Hive Connection

- Log in to the CloudTable management console.

- On the Cluster Management page, click the target cluster to go to the cluster details page.

- Choose Connection Management > Create. The Create page is displayed.

- Set the connection type:

- Set Authentication Type to SIMPLE and disable OBS Storage.

- Set Authentication Type to KERBEROS and disable OBS Storage.

- Set Authentication Type to SIMPLE and enable OBS Storage.

- Set Authentication Type to KERBEROS and enable OBS Storage.

Table 1 Parameter description Parameter

Description

Connection Name

Connection name. You can enter a name indicating the type of the data source to be connected.

NOTE:A connection name must start with a letter and contain 4 to 64 letters, digits, and hyphens (-). It cannot contain other special characters.

Connector

External data directory component supported by Doris. Currently, only Hive is available.

Hadoop Type

Hadoop component supported by Doris. Currently, only MRS is available.

Cluster Name

Name of an MRS cluster.

NOTE:Doris can connect to only one user in a Kerberos cluster.

Manager IP

Floating IP address of MRS Manager. After you select the cluster to be connected, the IP address is automatically filled in.

Authentication Type

MRS cluster authentication type. Values of this parameter include KERBEROS (for secure clusters) and SIMPLE (for common clusters). After you select the cluster to be connected, this parameter is automatically filled in.

NOTE:Only one KERBEROS connection can be created.

Connector Version

Version of the data source component of the MRS cluster. Hive supports version 3.x. The value can be 3.X.

Username

Username of the MRS cluster. The user must have adequate permissions to access the underlying data of Hive and HDFS. If you want to create a user, see .

Password

Password of the MRS user mrs_user_name.

OBS Storage

When this toggle is on, the data table associated with the created catalog connection is stored in OBS. When toggled off, the data table associated with the catalog connection is stored in HDFS.

- After setting the parameters, click Test. If the test fails, check whether the username and password are correct.

- After the test is complete, click OK. The created connection record will be displayed on the Connection Management page.

Step 2: Running SQL Commands to Create a Hive Catalog Table

- Use the SSH login tool to remotely log in to the Linux ECS through the EIP.

For details, see "Instance" > "Logging In to a Linux ECS" > "Logging In to an ECS Using an SSH Password" in the Elastic Cloud Server User Guide.

- Access the Doris cluster. For details, see Using the MySQL Client to Connect to a Common Doris Cluster.

- Create a Hive catalog table.

- Create a catalog table with the authentication type set to SIMPLE to access Hive data stored in HDFS.CREATE CATALOG hive_catalog_simple PROPERTIES ('type'='hms','hive.metastore.uris'='thrift://192.X.X.X:port,thrift://192.x.x.x:port','hive.metastore.sasl.enabled' = 'false','dfs.nameservices'='hacluster','dfs.ha.namenodes.hacluster'='3,4','dfs.namenode.rpc-address.hacluster.3'='192.x.x.x:port','dfs.namenode.rpc-address.hacluster.4'='192.x.x.x:port','dfs.client.failover.proxy.provider.hacluster'='***','hive.version' = '3.1.0');

- Create a catalog table with the authentication type set to KERBEROS to access Hive data stored in HDFS.CREATE CATALOG hive_catalog PROPERTIES ('type'='hms','hive.metastore.uris' = 'thrift://192.x.x.x:port,thrift://192.x.x.x:port','hive.metastore.sasl.enabled' = 'true','hive.server2.thrift.sasl.qop'='auth-conf','hive.server2.authentication' = 'KERBEROS','hive.server2.authentication.kerberos.principal' = '***','hive.metastore.kerberos.principal' = '***','dfs.nameservices'='hacluster','dfs.ha.namenodes.hacluster'='3,4','dfs.namenode.rpc-address.hacluster.3'='192.x.x.x:port','dfs.namenode.rpc-address.hacluster.4'='192.x.x.x:port','dfs.client.failover.proxy.provider.hacluster'='***','hadoop.security.authentication'='kerberos','hadoop.kerberos.principal' = '{kinit_result}', -- Result of kinit {USER_NAME}'hive.version' = '3.1.0','fs.defaultFS'='hdfs://hacluster','hadoop.rpc.protection'='privacy');

- Create a catalog table with the authentication type set to SIMPLE and access Hive data stored in OBS.CREATE CATALOG hive_catalog_simple_obs PROPERTIES ('type'='hms','hive.metastore.uris'='thrift://192.x.x.x:port,thrift://192.x.x.x:port','obs.access_key' = '***','obs.secret_key' = '***','obs.endpoint' = '***','obs.region' = '***','hive.metastore.sasl.enabled' = 'true','hive.version' = '3.1.0');

- Create a catalog table with the authentication type set to KERBEROS and access Hive data stored in OBS.CREATE CATALOG hive_catalog_OBS PROPERTIES ('type'='hms','hive.metastore.uris' = 'thrift://192.x.x.x:port,thrift://192.x.x.x:port','hive.metastore.sasl.enabled' = 'true','hive.server2.thrift.sasl.qop'='auth-conf','hive.server2.authentication' = 'KERBEROS','hive.server2.authentication.kerberos.principal' = '***','hive.metastore.kerberos.principal' = '***','hadoop.security.authentication'='kerberos','hadoop.kerberos.principal' = 'USER_NAME','hive.version' = '3.1.0','fs.defaultFS'='hdfs://hacluster','hadoop.rpc.protection'='privacy','obs.access_key' = '***','obs.secret_key' = '***','obs.endpoint' = '***','obs.region' = '***');

The following table describes related parameters and how to obtain parameter values.

Table 2 Parameter description Parameter

Description

type

Type of the external data to be connected.

hive.metastore.uris

Hive metadata URI, which can be viewed in the hive-site.xml configuration file.

hive.metastore.sasl.enabled

Obtain the value in the hive-site.xml configuration file.

dfs.nameservices

Obtain the value in the hdfs-site.xml configuration file.

dfs.ha.namenodes.hacluster

Obtain the value in the hdfs-site.xml configuration file.

dfs.namenode.rpc-address.hacluster.3

Obtain the value in the hdfs-site.xml configuration file.

NOTE:Obtain the IP address of this parameter on FusionInsight Manager. Log in to the home page of the FusionInsight Manager, choose the target component and click the corresponding instance.

dfs.namenode.rpc-address.hacluster.4

Obtain the value in the hdfs-site.xml configuration file.

NOTE:Obtain the IP address of this parameter on FusionInsight Manager. Log in to the home page of the FusionInsight Manager, choose the target component and click the corresponding instance.

dfs.client.failover.proxy.provider.hacluster

Obtain the value in the hdfs-site.xml configuration file.

hive.version

Hive version.

hive.server2.thrift.sasl.qop

Obtain the value in the hive-site.xml configuration file.

hive.server2.authentication

Authentication type, which can be viewed on the Connection Management page.

hive.server2.authentication.kerberos.principal

Obtain the value in the hive-site.xml configuration file.

hive.metastore.kerberos.principal

Obtain the value in the hive-site.xml configuration file.

hadoop.kerberos.principal

Obtain the value of hadoop.kerberos.principal as follows:

- Method 1:

- Log in to the MRS Manager page of the cluster.

- Choose System > Permission > User to access the user page and obtain the user.

- Click Domain and Mutual Trust to access the Domain and Mutual Trust page and obtain the local domain.

- The parameter value is in the format of User name+Local domain.

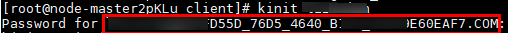

- Method 2:

- Log in to the node where the MRS client is located as user root.

- Go to the client installation path.cd /opt/Bigdata/client/

- Run the following command to load the environment variables:source bigdata_env

- Run the kinit command to authenticate the user and obtain the parameter value.kinit Username

hive.version

Hive version.

fs.defaultFS

Obtain the value in the core-site.xml configuration file.

hadoop.rpc.protection

Obtain the value in the core-site.xml configuration file.

obs.access_key

Access key. For details about how to obtain the access key, see section "Access Keys" in My Credentials User Guide.

NOTE:obs.access_key can be used to access underlying data files.

obs.secret_key

Secret key. For details about how to obtain the access key, see section "Access Keys" in My Credentials User Guide.

obs.endpoint

OBS address. Contact technical support to obtain the address.

obs.region

OBS region, which can be viewed on the OBS console.

- Log in to the node (Master1) where the MRS client is deployed as user root.

- Go to the client directory. This directory contains the folders of all MRS components. Currently, only the folders of HDFS and Hive are displayed.cd /opt/Bigdata/client/Note

The HDFS folder contains the core-site.xml and hdfs-site.xml configuration files and the Hive folder contains the hive-site.xml configuration file.

- Obtain the parameter values in these configuration files.

- Check the HDFS component.cd ./HDFS/hadoop/etc/

Go to the hadoop directory.

cd hadoopCheck the hdfs-site.xml configuration file and find the related parameters.

cat hdfs-site.xml - View the hive-site.xml configuration file of the Hive component and search for related parameters.cat /opt/Bigdata/client/Hive/config/hive-site.xml

- Check the HDFS component.

- Method 1:

- Create a catalog table with the authentication type set to SIMPLE to access Hive data stored in HDFS.

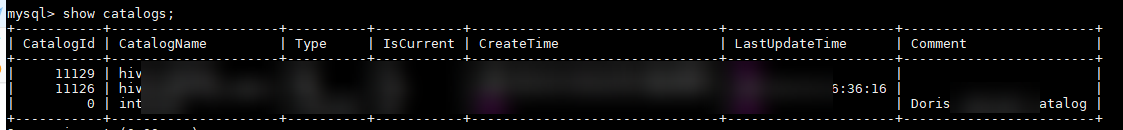

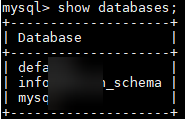

Step 3: Querying the Data Mapping Table

- View catalogs.show catalogs;

- Run the following command to query the databases in a catalog:show databases from catalog name;

- Switch to the specified catalog.switch catalog name;

- View the specified table in a database.show tables from 'catalog name'.'database';

Specify the table.

select * from 'catalog name'.'database name'.'table name';NoteThe use database; command is intended solely for testing environments with a limited number of data tables. Use this command with extreme caution in production clusters. In environments with a large number of data tables, executing this command will load metadata for all tables, potentially causing a significant surge in pressure on the Hive Metastore. This increased load can negatively impact the stability and performance of the MRS production environment.

FAQs

- If the following error message is displayed, perform a active/standby switchover for HDFS nodes on FusionInsight Manager.mysql> select * from hive_hdfs_test2;ERROR 1105 (HY000): errCode = 2, detailMessage = get file split failed for table: hive_hdfs_test2, err: org.apache.hadoop.ipc.RemoteException: Operation category READ is not supported in state standby. Visit https://s.apache.org/sbnn-errorat org.apache.hadoop.hdfs.server.namenode.ha.StandbyState.checkOperation(StandbyState.java:108)at org.apache.hadoop.hdfs.server.namenode.NameNode$NameNodeHAContext.checkOperation(NameNode.java:2200)at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkOperation(FSNamesystem.java:

- When creating a Catalog, you can use the parameter file.meta.cache.ttl-second to set the automatic expiration time of the Hive partition file cache, or set this value to 0 (unit: second) to disable the partition file cache. The following provides an example.CREATE CATALOG hive_catalog PROPERTIES ('type'='hms','hive.metastore.uris' = 'thrift://127.x.x.x:port','obs.access_key' = '***','obs.secret_key' = '***','obs.endpoint' = '***','obs.region' = '***''file.meta.cache.ttl-second' = '60');

- Prerequisites

- Step 1: Creating an MRS Hive Connection

- Step 2: Running SQL Commands to Create a Hive Catalog Table

- Step 3: Querying the Data Mapping Table

- FAQs