Scheduling EVS Disks Across AZs Using csi-disk-topology

Background

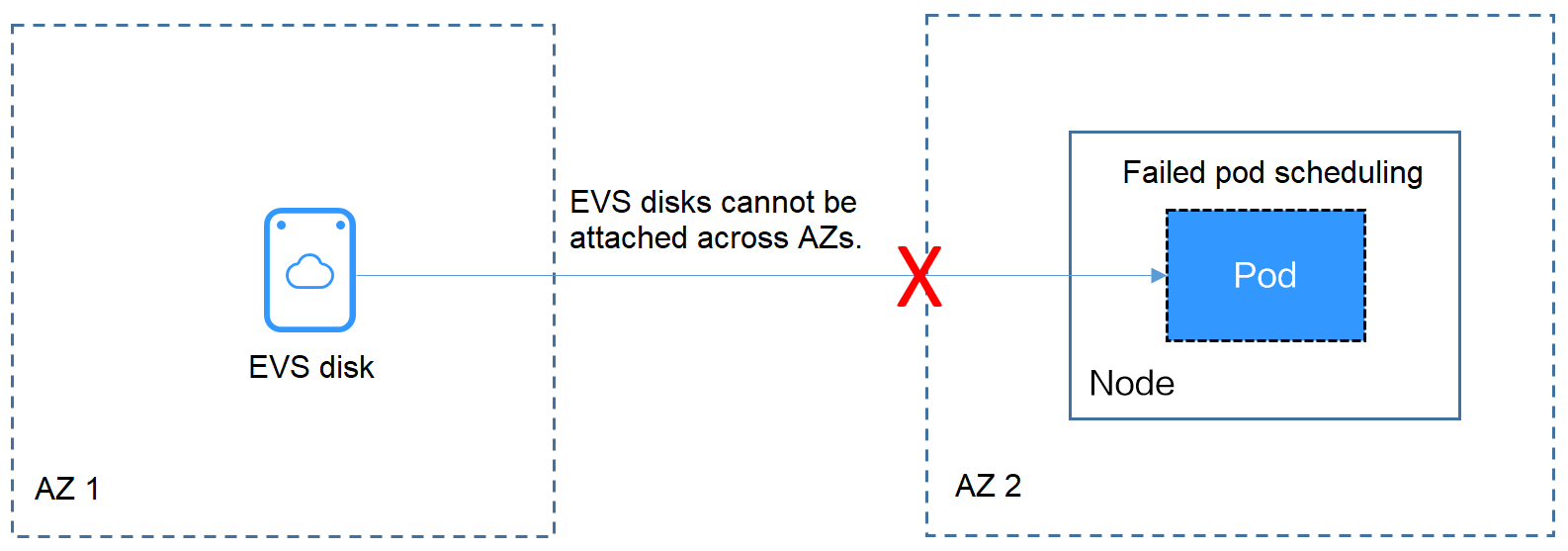

An EVS disk cannot be attached to a node in a different AZ. For example, an EVS disk in AZ 1 cannot be attached to a node in AZ 2. If a StatefulSet uses the csi-disk storage class, a PVC and a PV are created immediately when the StatefulSet is scheduled (an EVS disk is created along with the PV), and the PVC is then bound to the PV. When the cluster nodes are spread across multiple AZs, however, the EVS disk created for the PVC and the node to which the pod is scheduled may be in different AZs. In that case the disk cannot be attached, and the pod fails to be scheduled.

Solution

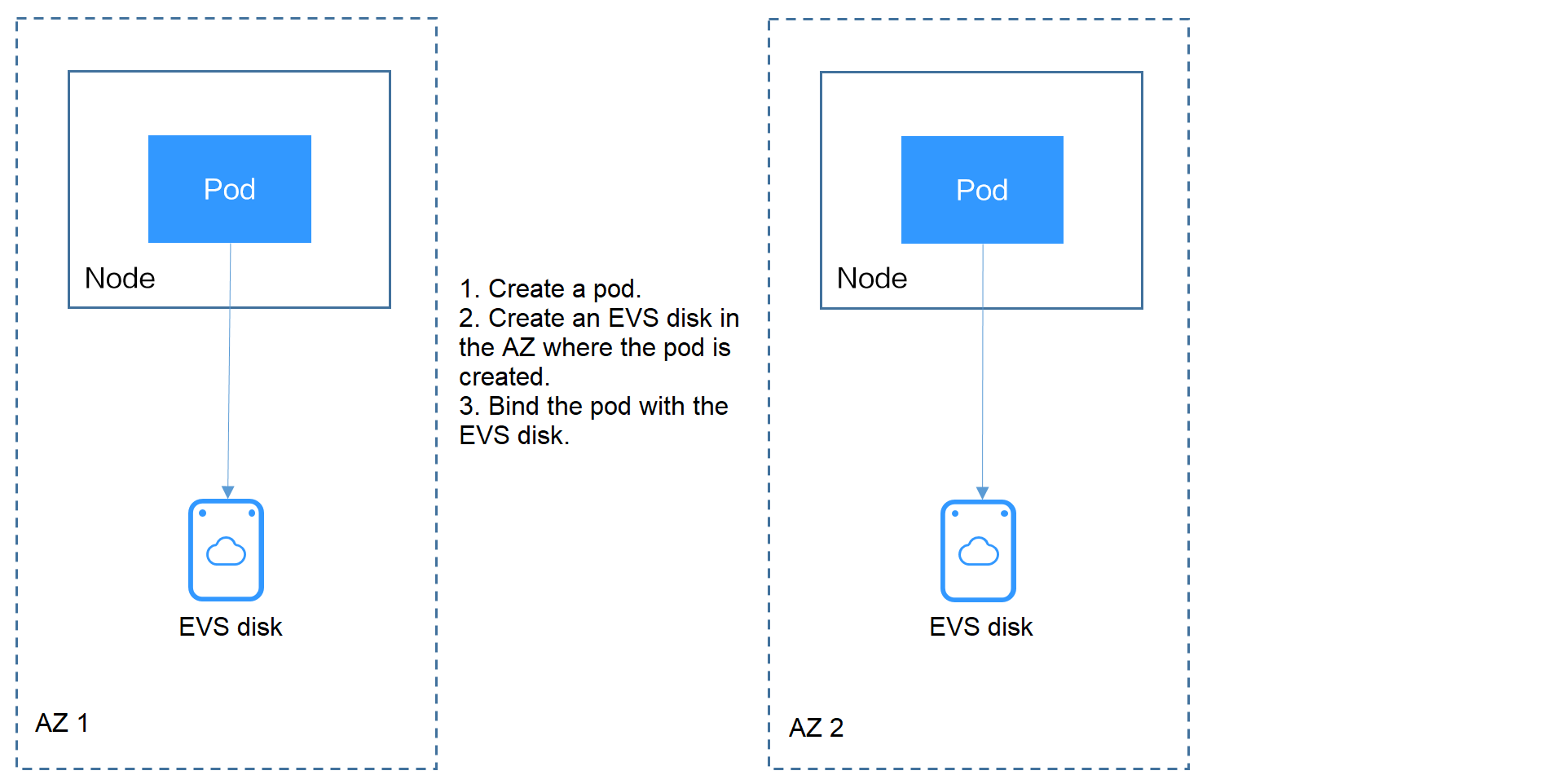

CCE provides a storage class named csi-disk-topology, which is a late-binding EVS disk type. When you create a PVC with this storage class, no PV is created at the same time. Instead, the PV is created only after the pod has been assigned to a node, and the EVS disk is created in that node's AZ. This ensures that the EVS disk can be attached and the pod can be scheduled successfully.

Failed Pod Scheduling Due to csi-disk Used in Cross-AZ Node Deployment

Create a cluster with three nodes in different AZs.

Use the csi-disk storage class to create a StatefulSet and check whether the workload is successfully created.

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: nginx
    spec:
      serviceName: nginx                            # Name of the headless Service
      replicas: 4
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
            - name: container-0
              image: nginx:alpine
              resources:
                limits:
                  cpu: 600m
                  memory: 200Mi
                requests:
                  cpu: 600m
                  memory: 200Mi
              volumeMounts:                         # Storage mounted to the pod
              - name: data
                mountPath: /usr/share/nginx/html    # Mount the storage to /usr/share/nginx/html.
          imagePullSecrets:
            - name: default-secret
      volumeClaimTemplates:
      - metadata:
          name: data
          annotations:
            everest.io/disk-volume-type: SAS
        spec:
          accessModes:
          - ReadWriteOnce
          resources:
            requests:
              storage: 1Gi
          storageClassName: csi-disk

The StatefulSet uses the following headless Service.

    apiVersion: v1
    kind: Service          # Object type (Service)
    metadata:
      name: nginx
      labels:
        app: nginx
    spec:
      ports:
        - name: nginx      # Name of the port for communication between pods
          port: 80         # Port number for communication between pods
      selector:
        app: nginx         # Select the pod whose label is app:nginx.
      clusterIP: None      # Set this parameter to None, indicating the headless Service.

After the creation, check the PVC and pod status. In the following output, the PVC has been created and bound successfully, and a pod is in the Pending state.

    # kubectl get pvc -owide
    NAME           STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE   VOLUMEMODE
    data-nginx-0   Bound    pvc-04e25985-fc93-4254-92a1-1085ce19d31e   1Gi        RWO            csi-disk       64s   Filesystem
    data-nginx-1   Bound    pvc-0ae6336b-a2ea-4ddc-8f63-cfc5f9efe189   1Gi        RWO            csi-disk       47s   Filesystem
    data-nginx-2   Bound    pvc-aa46f452-cc5b-4dbd-825a-da68c858720d   1Gi        RWO            csi-disk       30s   Filesystem
    data-nginx-3   Bound    pvc-3d60e532-ff31-42df-9e78-015cacb18a0b   1Gi        RWO            csi-disk       14s   Filesystem

    # kubectl get pod -owide
    NAME      READY   STATUS    RESTARTS   AGE     IP             NODE            NOMINATED NODE   READINESS GATES
    nginx-0   1/1     Running   0          2m25s   172.16.0.12    192.168.0.121   <none>           <none>
    nginx-1   1/1     Running   0          2m8s    172.16.0.136   192.168.0.211   <none>           <none>
    nginx-2   1/1     Running   0          111s    172.16.1.7     192.168.0.240   <none>           <none>
    nginx-3   0/1     Pending   0          95s     <none>         <none>          <none>           <none>

The event information of the pod shows that the scheduling fails due to no available node. Two nodes (in AZ 1 and AZ 2) do not have sufficient CPUs, and the created EVS disk is not in the AZ where the third node (in AZ 3) is located. As a result, the pod cannot use the EVS disk.

    # kubectl describe pod nginx-3
    Name:           nginx-3
    ...
    Events:
      Type     Reason            Age   From               Message
      ----     ------            ----  ----               -------
      Warning  FailedScheduling  111s  default-scheduler  0/3 nodes are available: 3 pod has unbound immediate PersistentVolumeClaims.
      Warning  FailedScheduling  111s  default-scheduler  0/3 nodes are available: 3 pod has unbound immediate PersistentVolumeClaims.
      Warning  FailedScheduling  28s   default-scheduler  0/3 nodes are available: 1 node(s) had volume node affinity conflict, 2 Insufficient cpu.

Check the AZ of the EVS disk created for each PVC. The disk for data-nginx-3 is in AZ 1. However, the node in AZ 1 has no free resources, and only the node in AZ 3 has spare CPU, so the scheduling fails. To avoid this, the creation and binding of the PV should be delayed until the pod has been scheduled to a node.
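The failure can be reproduced with a small simulation. The following is a minimal sketch, not the actual scheduler logic: the node names, the free CPU values, and the AZs of the first three disks are hypothetical. Only the 600m CPU request and the placement of the data-nginx-3 disk in AZ 1 come from the example above.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    az: str
    free_cpu_m: int  # free CPU in millicores (hypothetical values)


def schedule(pod_cpu_m, disk_az, nodes):
    """Place a pod whose disk already exists in disk_az (Immediate binding).

    Returns (node name or None, reasons), where reasons counts why the
    nodes examined so far were rejected.
    """
    reasons = {"Insufficient cpu": 0, "volume node affinity conflict": 0}
    for node in nodes:
        if node.free_cpu_m < pod_cpu_m:
            reasons["Insufficient cpu"] += 1
        elif node.az != disk_az:
            reasons["volume node affinity conflict"] += 1
        else:
            node.free_cpu_m -= pod_cpu_m
            return node.name, reasons
    return None, reasons


# Assumed cluster: one node per AZ, the AZ 3 node has more free CPU.
nodes = [
    Node("node-az1", "AZ1", 1000),
    Node("node-az2", "AZ2", 1000),
    Node("node-az3", "AZ3", 2000),
]

# AZ of each disk, fixed before scheduling. Only nginx-3 -> AZ1 is from the
# text; the other three values are assumptions for illustration.
disk_az = {"nginx-0": "AZ1", "nginx-1": "AZ2", "nginx-2": "AZ3", "nginx-3": "AZ1"}

placements = {}
for pod, az in disk_az.items():
    placements[pod], rejected = schedule(600, az, nodes)

print(placements)  # nginx-3 maps to None
print(rejected)    # rejection reasons for the last pod (nginx-3)
```

Under these assumptions, nginx-3 is rejected by two nodes for insufficient CPU and by one node for an AZ mismatch, which mirrors the FailedScheduling event shown earlier.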

Storage Class for Delayed Binding

If you check the cluster storage class, you can see that the binding mode of csi-disk-topology is WaitForFirstConsumer, indicating that a PV is created and bound when a pod uses the PVC. That is, the PV and the underlying storage resources are created based on the pod information.
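The effect of WaitForFirstConsumer can be illustrated with a short simulation. This is a sketch under stated assumptions rather than the real scheduler or provisioner: the node names and free CPU values are hypothetical, and a simple first-fit rule stands in for the scheduler. The point is the order of operations: the node is chosen first, and the disk is then created in that node's AZ.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    az: str
    free_cpu_m: int  # free CPU in millicores (hypothetical values)


def schedule_then_provision(pod_cpu_m, nodes):
    """Late binding: pick a node by CPU only, then create the disk in its AZ.

    Returns (node name, AZ where the disk is created), or (None, None)
    if no node has enough free CPU.
    """
    for node in nodes:
        if node.free_cpu_m >= pod_cpu_m:
            node.free_cpu_m -= pod_cpu_m
            return node.name, node.az
    return None, None


# Assumed cluster: one node per AZ, the AZ 3 node has more free CPU.
nodes = [
    Node("node-az1", "AZ1", 1000),
    Node("node-az2", "AZ2", 1000),
    Node("node-az3", "AZ3", 2000),
]

# Four replicas, each requesting 600m CPU as in the StatefulSet example.
results = {f"nginx-{i}": schedule_then_provision(600, nodes) for i in range(4)}

for pod, (node, az) in results.items():
    print(pod, node, az)
```

Because no disk exists when the node is selected, there is no AZ constraint to violate, and every pod that fits on some node gets a disk in the same AZ as that node.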

    # kubectl get storageclass
    NAME                PROVISIONER               RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
    csi-disk            everest-csi-provisioner   Delete          Immediate              true                   156m
    csi-disk-topology   everest-csi-provisioner   Delete          WaitForFirstConsumer   true                   156m
    csi-nas             everest-csi-provisioner   Delete          Immediate              true                   156m
    csi-obs             everest-csi-provisioner   Delete          Immediate              false                  156m

VOLUMEBINDINGMODE is displayed if your cluster is v1.19. It is not displayed in clusters of v1.17 or v1.15.

You can also view the binding mode in the csi-disk-topology details.

    # kubectl describe sc csi-disk-topology
    Name:                  csi-disk-topology
    IsDefaultClass:        No
    Annotations:           <none>
    Provisioner:           everest-csi-provisioner
    Parameters:            csi.storage.k8s.io/csi-driver-name=disk.csi.everest.io,csi.storage.k8s.io/fstype=ext4,everest.io/disk-volume-type=SAS,everest.io/passthrough=true
    AllowVolumeExpansion:  True
    MountOptions:          <none>
    ReclaimPolicy:         Delete
    VolumeBindingMode:     WaitForFirstConsumer
    Events:                <none>

Create PVCs of the csi-disk and csi-disk-topology classes. Observe the differences between these two types of PVCs.

- csi-disk

      apiVersion: v1
      kind: PersistentVolumeClaim
      metadata:
        name: disk
        annotations:
          everest.io/disk-volume-type: SAS
      spec:
        accessModes:
        - ReadWriteOnce
        resources:
          requests:
            storage: 10Gi
        storageClassName: csi-disk        # StorageClass

- csi-disk-topology

      apiVersion: v1
      kind: PersistentVolumeClaim
      metadata:
        name: topology
        annotations:
          everest.io/disk-volume-type: SAS
      spec:
        accessModes:
        - ReadWriteOnce
        resources:
          requests:
            storage: 10Gi
        storageClassName: csi-disk-topology        # StorageClass

View the PVC details. As shown below, the csi-disk PVC is in Bound state and the csi-disk-topology PVC is in Pending state.

    # kubectl create -f pvc1.yaml
    persistentvolumeclaim/disk created
    # kubectl create -f pvc2.yaml
    persistentvolumeclaim/topology created
    # kubectl get pvc
    NAME       STATUS    VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS        AGE
    disk       Bound     pvc-88d96508-d246-422e-91f0-8caf414001fc   10Gi       RWO            csi-disk            18s
    topology   Pending                                                                        csi-disk-topology   2s

View details about the csi-disk-topology PVC. You can see that "waiting for first consumer to be created before binding" is displayed in the event, indicating that the PVC is bound after the consumer (pod) is created.

    # kubectl describe pvc topology
    Name:          topology
    Namespace:     default
    StorageClass:  csi-disk-topology
    Status:        Pending
    Volume:
    Labels:        <none>
    Annotations:   everest.io/disk-volume-type: SAS
    Finalizers:    [kubernetes.io/pvc-protection]
    Capacity:
    Access Modes:
    VolumeMode:    Filesystem
    Used By:       <none>
    Events:
      Type    Reason                Age               From                         Message
      ----    ------                ----              ----                         -------
      Normal  WaitForFirstConsumer  5s (x3 over 30s)  persistentvolume-controller  waiting for first consumer to be created before binding

Create a workload that uses the PVC. Set the PVC name to topology.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
    spec:
      selector:
        matchLabels:
          app: nginx
      replicas: 1
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - image: nginx:alpine
            name: container-0
            volumeMounts:
            - mountPath: /tmp                 # Mount path
              name: topology-example
          restartPolicy: Always
          volumes:
          - name: topology-example
            persistentVolumeClaim:
              claimName: topology             # PVC name

After the workload is created, check the PVC details again. You can see that the PVC is now bound successfully.

    # kubectl describe pvc topology
    Name:          topology
    Namespace:     default
    StorageClass:  csi-disk-topology
    Status:        Bound
    ....
    Used By:       nginx-deployment-fcd9fd98b-x6tbs
    Events:
      Type    Reason                 Age                   From                                                                                                  Message
      ----    ------                 ----                  ----                                                                                                  -------
      Normal  WaitForFirstConsumer   84s (x26 over 7m34s)  persistentvolume-controller                                                                           waiting for first consumer to be created before binding
      Normal  Provisioning           54s                   everest-csi-provisioner_everest-csi-controller-7965dc48c4-5k799_2a6b513e-f01f-4e77-af21-6d7f8d4dbc98  External provisioner is provisioning volume for claim "default/topology"
      Normal  ProvisioningSucceeded  52s                   everest-csi-provisioner_everest-csi-controller-7965dc48c4-5k799_2a6b513e-f01f-4e77-af21-6d7f8d4dbc98  Successfully provisioned volume pvc-9a89ea12-4708-4c71-8ec5-97981da032c9

Using csi-disk-topology in Cross-AZ Node Deployment

The following uses csi-disk-topology to create a StatefulSet with the same configurations used in the preceding example.

      volumeClaimTemplates:
      - metadata:
          name: data
          annotations:
            everest.io/disk-volume-type: SAS
        spec:
          accessModes:
          - ReadWriteOnce
          resources:
            requests:
              storage: 1Gi
          storageClassName: csi-disk-topology

After the creation, check the PVC and pod status. As shown in the following output, the PVC and pod can be created successfully. The nginx-3 pod is created on the node in AZ 3.

    # kubectl get pvc -owide
    NAME           STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS        AGE   VOLUMEMODE
    data-nginx-0   Bound    pvc-43802cec-cf78-4876-bcca-e041618f2470   1Gi        RWO            csi-disk-topology   55s   Filesystem
    data-nginx-1   Bound    pvc-fc942a73-45d3-476b-95d4-1eb94bf19f1f   1Gi        RWO            csi-disk-topology   39s   Filesystem
    data-nginx-2   Bound    pvc-d219f4b7-e7cb-4832-a3ae-01ad689e364e   1Gi        RWO            csi-disk-topology   22s   Filesystem
    data-nginx-3   Bound    pvc-b54a61e1-1c0f-42b1-9951-410ebd326a4d   1Gi        RWO            csi-disk-topology   9s    Filesystem

    # kubectl get pod -owide
    NAME      READY   STATUS    RESTARTS   AGE   IP             NODE            NOMINATED NODE   READINESS GATES
    nginx-0   1/1     Running   0          65s   172.16.1.8     192.168.0.240   <none>           <none>
    nginx-1   1/1     Running   0          49s   172.16.0.13    192.168.0.121   <none>           <none>
    nginx-2   1/1     Running   0          32s   172.16.0.137   192.168.0.211   <none>           <none>
    nginx-3   1/1     Running   0          19s   172.16.1.9     192.168.0.240   <none>           <none>
